Using Electronics to Teach Software Architecture

Three months ago, I was teaching a class on Fundamentals of Software Engineering. This course has a module on Software Architecture, which I typically teach from the Carnegie Mellon SEI perspective. After teaching it a few times, I had been thinking about better ways to transition students from the “computer-science-first”, code-driven perspective they have when they get to this course, to the more abstract level of thought desirable to properly grasp and reason about software architecture in a structured fashion. Read on to find out how I reached out to another engineering discipline to achieve this.

Structured Software Architecture

Before embarking on my master’s degree in Software Engineering, the closest I had come to attempt to reason about “architecture” (at least from a conceptual and documentation point of view) was Larry Wall’s 2003 State of the Onion keynote. As is typically the case with geniuses, behind all the madness Larry makes some really good points about ambiguity and notation overloading in architectural descriptions.

Now, shortly after I saw that, I found myself in England with an incredibly slow, lonely and long Christmas holiday ahead of me. Part of my copious time was put to good use reading through Software Architecture In Practice, the “bible” of structured software architecture. Back then it was in its second edition, and only (relatively) recently has the third edition being released. To me, one of the reasons for this decade-long hiatus is the soundness of the concepts put forth in the book.

(Side note: ever since my MSc days, I’ve taken great pains to ensure I use “structured” as opposed to “formal” whenever the latter implies mathematics-based theory, and whenever I feel the need to distance what I’m espousing from empirical methods such as those ridiculed by Larry Wall in the keynote linked above; but this is probably more of an antics of mine than an actual necessity. Please accept my apologies.)

From that book, as well as the equally relevant Applied Software Architecture, the IEEE 1471 standard, and to a lesser extent other sources, I have extracted a basic vocabulary and conceptual framework, which a decade later I can attest results incredibly valuable to explore software architectures (and in fact many other types of architectures; I shall write about that on a later post). This basic vocabulary consists of these six key elements: components, ports, connectors, roles, connections and properties. (One could argue for a seventh element, protocols, but I believe that can generally be expressed as properties of other elements, particularly roles)

Without attempting to turn this post into a detailed primer on structured software architecture, components are “things” within different categories; clearly encapsulated objects that can mostly be treated as black boxes, except for explicitly, well-specified ports that constitute externally visible elements of components, which are interesting within a given aspect and level of the detail for the system under construction.

Very important in this discussion are connectors. Instead of the ambiguous, semantically insufficient lines of the typical “box and line” architecture diagram, connectors are first-class citizens in this vocabulary. They specify controlled ways by which components interact (via their ports).

The richness of the model can now be made apparent; using the SEI terminology, architectures are interesting from at least three different viewpoints:

-

Module decomposition: to reason about the static aspects of the software. I like to say this is how the software looks like within the developers' minds when looking at it through an IDE. Components here could be classes, packages or modules; ports could be references to the components' identities (like Java’s “MyClass.class” construct), or parts of the public interface of the component; connectors then describe relationships such as “inheritance” (among components' identity ports, so to speak) or “depends”/“invokes” (between methods of the components' public interface)

-

Component-and-connector: to reason about the runtime aspects of the software. I liken this to “seeing” software through a profiler or debugger: you are thinking in terms of objects or instances, processors and threads. These would be the components. Connectors in this viewpoint represent concepts such as shared access, runtime resolution and invocation of services, etc.

-

Allocation: to reason about the deployment of the software. This is like the understanding you get from analyzing the application’s build and installation scripts or procedures. Components are binary pieces of the software, processing nodes and software infrastructure elements such as Web or database servers; connectors show how these elements interact with each other, including which bits of software get deployed to which infrastructure elements

Note that components are not therefore “absolute” or final partitions of the application; instead, the same application is variously split into sets of more or less orthogonal components, according to the viewpoints under analysis, although of course there must be a correspondence between components belonging to different viewpoints. This is quite similar to having different diagrams and schematics of the civil architecture of a building (electrical, structural load, etc.), which must all relate to each other; the same thinking can be applied to biological systems (eg. humans beings, with respiratory, digestive, circulatory and nervous subsystems), or cars (with fuel, air conditioning, electrical and many other subsystems). In all cases, you can observe a pattern of describing subsystems with elements whose types can be equated across all subsystems (at an appropriately high level of abstraction), and whose instances must bear certain correlation among themselves in controlled and specific ways.

Roles are attached to connectors, and define specific details that components being connected must observe, typically through their ports. Connections detail specific instances of connectors being bound to specific instances of components through their ports, enforcing role semantics in the process. Finally, properties are structured attributes attached to any of the previous elements of the vocabulary in order to augment and specify them. In their simplest embodiment, properties may simply be name-value pairs used to annotate any of the previously described elements.

To round this brief overview on structured architecture, I will mention that there is a process, language and notation to progressively break down a system into elements of the vocabulary; and the critical notion that the starting point, and driving concerns, in carrying out this break down, are the *non-functional *requirements, as opposed to the functional requirements of the system (although an important part of the architectural design effort is ensuring that the architecture properly considers all functional requirements by allotting them to specific components).

Making the Novice Make Sense of This

If you are reading this, you are probably a software practitioner experienced in one or more roles – or maybe not; and you may have been exposed to this concepts before – or not. If you are experienced and didn’t understand much, I probably made a lousy job – but I hope you’ll understand my quandary having to provide a basic understanding of the topic in a very short amount of time to my students. If you are experienced in software development, regardless, to an extent, of how much you understood, you can probably see this can be valuable to analyze a system’s design and labor distribution before getting started with actual code writing – and you can also probably see that this is even harder to grasp for a novice student/developer. If you are a novice student/developer, you are most certainly scratching your head trying to map the above into any kind of useful activity or artifact within your worldview of software engineering.

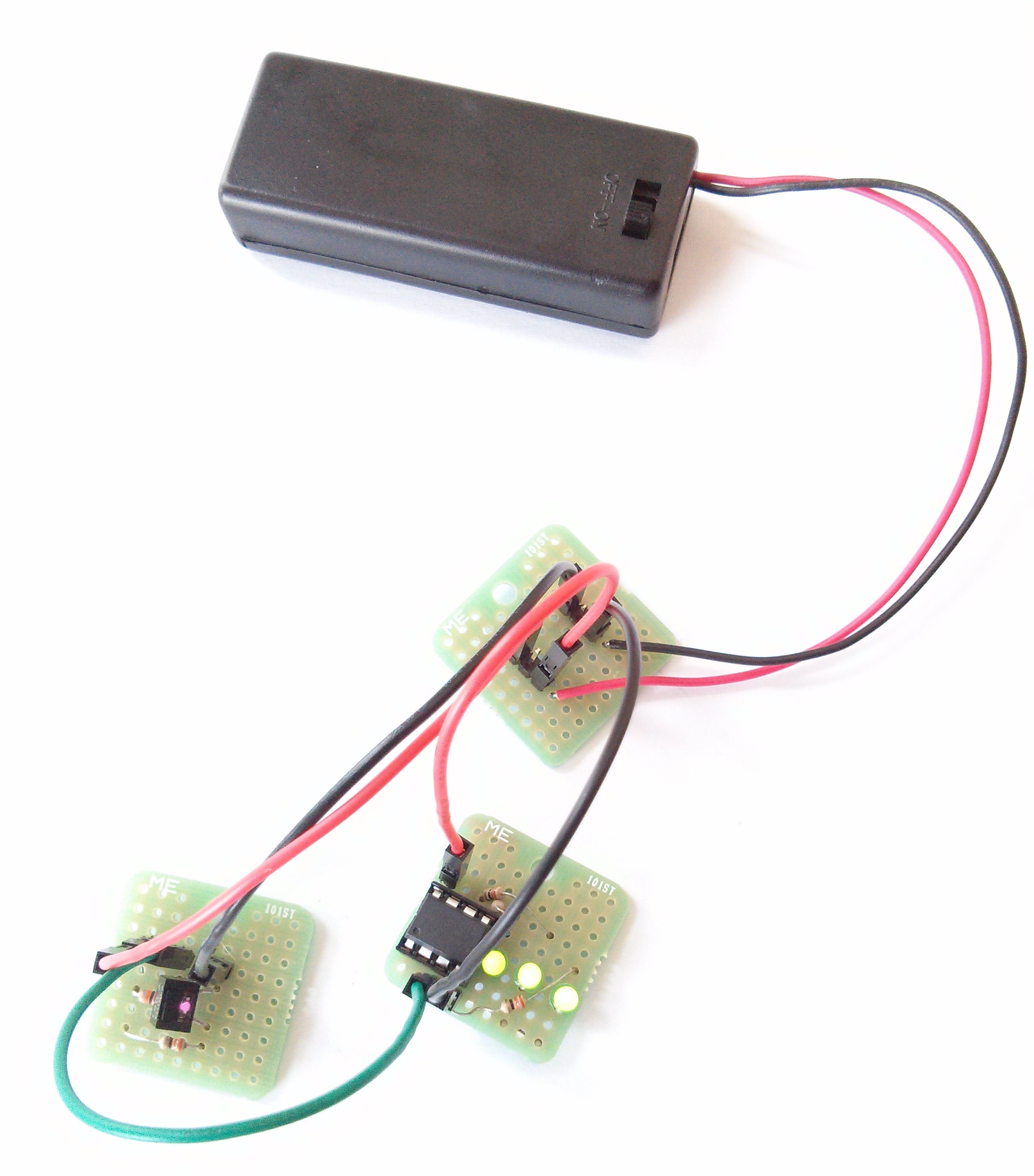

In order to mitigate these issues (particularly, I think, my own limitations as a teacher), I resorted to using electronic components to introduce these concepts. Specifically, I set out to build small devices that fell into one of two categories: sensors (devices that can measure some real-world physics phenomenon, especially one not readily perceivable or measurable by humans, and relay it through a well-defined electrical protocol), and displays (devices that can traduce sensors' outputs into some physical phenomenon that can be perceived and measured by humans). I could have gotten something like the awesome littleBits, but self-assembly was both cheaper and challenging in its own. If I had to do it again, I’d probably go with something similar to Sparkfun’s recently announced Qtechknow Learning Sensors Kit, probably tweaked to make the processing and display bits more modular. In any case, my bits, while not particularly elegant or functional, served the purposes I was looking for.

I built four sensor boards and four display boards as follows:

-

Sensors

- Button: generates a digital pulse based on a button being pressed

- Photosensor: generates an analog signal based on varying amounts of light hitting the sensor

- Tilt: generates a digital pulse based on the board being tilted

- Distance: generates an analog signal based on an infrared-reflective object’s distance to the sensor

-

Displays

- Indicator: translates a digital pulse into a blinking LED

- Color: translates a digital pulse into a new random color of an RGB LED

- Bar: translates an analog pulse into a bar-graph like display by powering between zero and three LEDs based on the pulse value

- Alternator: translates a digital pulse into a toggle between a red and a green LED being lit

I also assembled battery packs along with the devices, and produced a one-page document with brief descriptions and pin-outs for each device.

A circuit made of the light sensor coupled to the bar display.

A circuit made of the light sensor coupled to the bar display.

The Experiment and Its Aftermath

In class, I divided students into four groups and gave each group a sensor, a display, a battery pack, jumper wires and a copy of the documentation – and set them loose for a few minutes. Some of them had previous experience with electronics; some didn’t. All teams managed to build small assemblies allowing them to excite their sensors and witness changes in their displays. I then motivated them to exchange parts and reconfigure their circuits. Some students raised concerns about connecting analog sensors to digital displays or vice versa, especially because there were not equal amounts of analog sensors and displays. I gave them sufficient time to try out all possible combinations of sensors and displays. They found it fun enough to go for it.

Once the exercise was over, I asked them what made possible this variety of configurations and changeability. They nailed the basic reasons pretty quickly: standard physical interfaces, well-specified electrical protocols, encapsulated components, uniform pin-outs… We also touched on the semantics of “fail-safe” design made evident when connecting the analog sensors to the digital displays or the other way around.

I then asked them the crucial question that was the point of the entire exercise:

What would have happened if I had given you PCs and bits of software to recombine instead?

It caused a deep effect on them. Some said the exercise would have taken much longer, on two accounts. On one hand, if the documentation turned out to be insufficient, they would have needed access to the source code and reading it critically for a while in order to understand its behavior. On the other hand, integrating or “gluing” software components is mostly done by writing *more *software, as opposed to configuring or otherwise “plugging” components in a more efficient manner (I do realize dependency injection and other techniques help software approach the electronics example here, but am afraid this is still neither the first nor the most commonly sought solution).

Others offered insight on the amount of testing required, the probability of introducing errors, and the need to explicitly code or consider a few scenarios (such as “fail-safe” semantics) that they had otherwise taken for granted when dealing with discrete electronic components.

I then guided them through a discussion of how software could be split up into components over which orthogonal but interrelated analysis could be carried out before much coding was done, by focusing on a handful of externally visible attributes, much like they were able to reason about discrete electronic components along a few different yet intertwined aspects (mechanical interface, electrical interface, analog vs digital protocols, etc.). This was the key to transition them into seeing software as high-level boxes selected according to different criteria and connected in ways relevant to the criteria or aspect under analysis. I was then able to introduce them to the structured software architecture vocabulary I outlined above and expand a bit on the drivers and processes defined to instantiate architectures based on the vocabulary.

In general, I think the experiment was highly successful, based on the reaction from students and the quality of the architectural work evidenced later in the course as compared to previous classes. I will keep practicing and honing the approach (my next planned opportunity takes place in September) and probably report about how it goes. In the short term, I am presenting this as part of the 9th International Congress on Scientific Research taking place this week in the Dominican Republic, under the academic/teaching research track. Do not hesitate to let me know in the comments below if you would like more details about the hardware, the software it runs or the presentation I’ll deliver at the congress – it will be in Spanish, but I can translate it to English if there’s enough interest.